This month, the BME Department’s newsletter featured highlights of what BME students did over the summer. The newsletter also included several new grants and publications from members of the BME Department. The full newsletter can be found here!

BME Department July/August Newsletter

This month, the BME Department’s newsletter featured lab member Destie Provenzano’s recently published paper. The newsletter also included a note from Dr. Zara regarding GW’s first-ever virtual fall semester, as well as faculty highlights. The full newsletter can be found here.

BME Department Inaugural Monthly Newsletter

This month, the BME Department published its first monthly e-newsletter. Professor Loew, chair of the department, wrote the introduction to the newsletter and congratulated the department’s most recent class of graduates. The full newsletter can be found here.

Students Present at 48th Annual IEEE AIPR 2019 Workshop

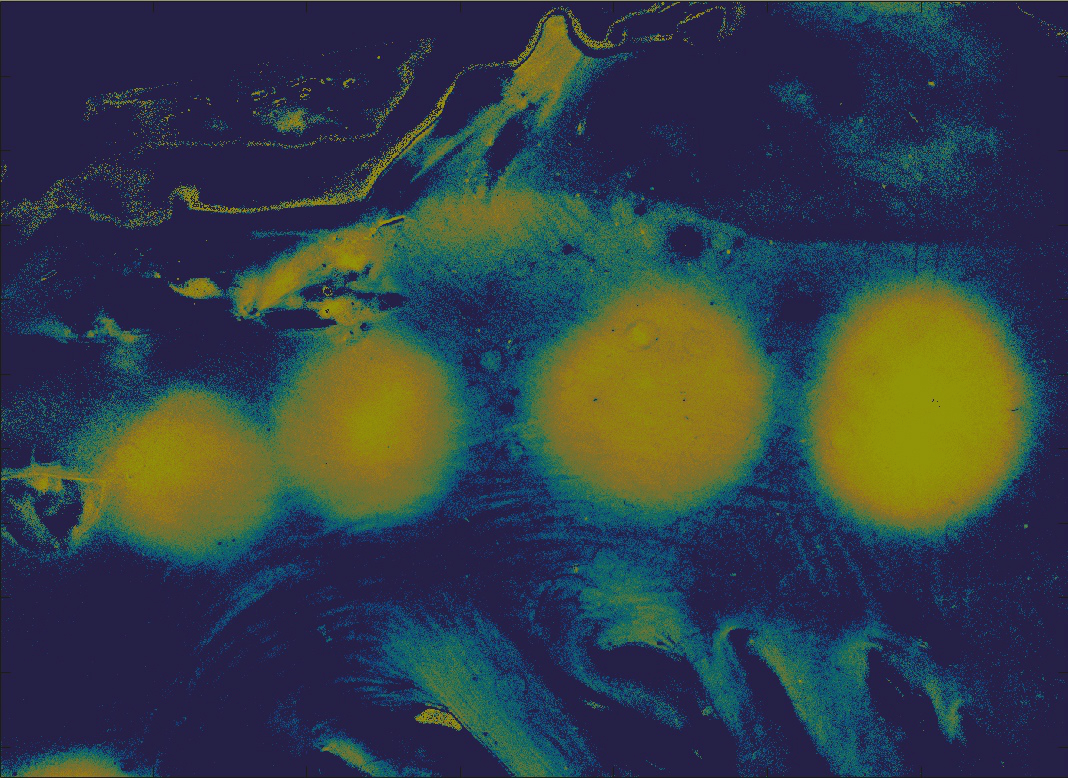

Our students Shuyue (Frank) Guan and Ange Lou attended the 48th Annual IEEE AIPR 2019 workshop, "Cognition, Collaboration, and Cloud," held in Washington, DC, from October 15 to 17. The IEEE-sponsored Applied Imagery Pattern Recognition (AIPR) workshop brings together researchers from government, industry, and academia across a broad range of disciplines. The 2019 workshop explored cognitive applications of vision, dynamic scene understanding, machine learning, the associated supporting applications, and the systems engineering needed to support dynamic workflows. Shuyue gave a presentation on evaluating the performance of Generative Adversarial Networks (GANs), and Ange presented his work on segmenting infrared breast images with deep neural networks.

A brief summary of Shuyue’s project and presentation: Recently, a number of papers have addressed the theory and applications of Generative Adversarial Networks (GANs) in various fields of image processing. Fewer studies, however, have directly evaluated GAN outputs, and those that have done so focused on classification performance and statistical metrics. In this paper, we consider a fundamental way to evaluate GANs: directly analyzing the images they generate, instead of using those images as inputs to other classifiers. We characterize an ideal GAN by three aspects:

1) Creativity: generated images do not duplicate the real images.
2) Inheritance: generated images share the style of the real images, retaining their key features.
3) Diversity: generated images differ from each other.

Based on these three aspects, we designed the Creativity-Inheritance-Diversity (CID) index to evaluate GAN performance. We compared our proposed measures with three commonly used GAN evaluation methods: Inception Score (IS), Fréchet Inception Distance (FID), and the 1-Nearest Neighbor classifier (1NNC). In addition, we discussed how this evaluation can deepen our understanding of GANs and help improve their performance.
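The summary does not give the CID formula, so it is not reproduced here, but one of the baselines it was compared against, the 1-Nearest Neighbor classifier (1NNC), is easy to illustrate. The sketch below is a generic leave-one-out 1-NN two-sample test, not the authors' implementation. It assumes each image has already been reduced to a feature vector and uses plain Euclidean distance; real samples are labeled 1, generated samples 0. The names `euclidean` and `one_nn_loo_accuracy` are hypothetical.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain Euclidean distance between two feature vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def one_nn_loo_accuracy(real: List[Sequence[float]], fake: List[Sequence[float]]) -> float:
    """Leave-one-out accuracy of a 1-NN classifier separating real (1) from generated (0) samples."""
    if not real or not fake:
        raise ValueError("need at least one real and one generated sample")
    samples = [(x, 1) for x in real] + [(x, 0) for x in fake]
    correct = 0
    for i, (x, label) in enumerate(samples):
        # Search every *other* sample for the nearest neighbor (leave-one-out).
        best_dist, best_label = math.inf, None
        for j, (y, other_label) in enumerate(samples):
            if i == j:
                continue
            d = euclidean(x, y)
            if d < best_dist:
                best_dist, best_label = d, other_label
        if best_label == label:
            correct += 1
    return correct / len(samples)


if __name__ == "__main__":
    real = [[0.0, 0.0], [5.0, 5.0]]
    # Generated set that exactly copies the real set.
    print(one_nn_loo_accuracy(real, real))  # 0.0
    # Generated set far away from the real set.
    print(one_nn_loo_accuracy([[0.0, 0.0], [0.0, 1.0]], [[10.0, 10.0], [10.0, 11.0]]))  # 1.0
```

Accuracy near 0.5 means the classifier cannot tell the two sets apart. Accuracy near 1.0 means generated samples are easy to separate from real ones. Accuracy well below 0.5, as in the copied example, is commonly read as a sign that the generator is duplicating its training images, which is the failure the Creativity aspect is meant to catch.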
Check the poster for more information about Shuyue’s GAN evaluation project.

A brief summary of Ange’s project and presentation: In our previous studies, a convolutional and deconvolutional neural network (C-DCNN) model was applied to automatically segment breast areas in infrared (IR) breast images. In this study, we applied a state-of-the-art deep learning segmentation model, MultiResUnet, which consists of an encoder that captures features and a decoder that enables precise localization. We also expanded our database to 450 images acquired from 14 patients and 16 volunteers. We used a thresholding method to remove interference from the raw images, remapped them from the original 16-bit depth to 8-bit, and then manually cropped and segmented the 8-bit images. Experiments using leave-one-out cross-validation (LOOCV), with results compared against the ground-truth images using Tanimoto similarity, show that the average accuracy of MultiResUnet is 91.47%, about 2% higher than that of the C-DCNN model. This improvement indicates that MultiResUnet is better suited to the infrared breast segmentation task than our previous model.
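Two of the steps above, the 16-bit to 8-bit remapping and the Tanimoto comparison, can be sketched in a few lines. The summary does not say exactly how the remapping or the averaging was done, so this assumes a simple linear window (pixels clipped to a hypothetical `[low, high]` range, then scaled to 0-255) and computes Tanimoto similarity on binary masks, where it equals |A ∩ B| / |A ∪ B|. The names `to_8bit`, `tanimoto`, and `mean_tanimoto` are illustrative, not the authors' code.

```python
from typing import List, Sequence, Tuple

Mask = Sequence[Sequence[int]]


def to_8bit(image16: Sequence[Sequence[int]], low: int, high: int) -> List[List[int]]:
    """Assumed remapping: clip 16-bit values to [low, high], then scale linearly to 0-255."""
    if high <= low:
        raise ValueError("high must be greater than low")
    out = []
    for row in image16:
        new_row = []
        for v in row:
            v = min(max(v, low), high)  # values outside the window saturate
            new_row.append(round((v - low) * 255 / (high - low)))
        out.append(new_row)
    return out


def tanimoto(pred: Mask, truth: Mask) -> float:
    """Tanimoto similarity of two same-sized binary masks: |A ∩ B| / |A ∪ B|."""
    inter = union = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            p, t = bool(p), bool(t)
            inter += int(p and t)
            union += int(p or t)
    # Two empty masks are treated as agreeing perfectly.
    return 1.0 if union == 0 else inter / union


def mean_tanimoto(pairs: Sequence[Tuple[Mask, Mask]]) -> float:
    """Average similarity over (prediction, ground truth) pairs, e.g. one pair per LOOCV fold."""
    if not pairs:
        raise ValueError("need at least one pair")
    return sum(tanimoto(p, t) for p, t in pairs) / len(pairs)


if __name__ == "__main__":
    print(to_8bit([[0, 1000, 1200, 2000, 3000]], low=1000, high=2000))  # [[0, 0, 51, 255, 255]]
    pred = [[1, 1, 0], [0, 1, 0]]
    truth = [[1, 0, 0], [0, 1, 1]]
    print(tanimoto(pred, truth))  # 0.5
```

The summary does not state whether the reported 91.47% is exactly this per-image mean; the sketch only shows how a Tanimoto-based score over LOOCV folds could be formed.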